This week, at the KDD (Knowledge Discovery and Data Mining) conference, we (as in the Microsoft SQL Server Data Mining team) presented the Table Analysis Tools for the Cloud, a preview of a technology that lets anybody play with some of Microsoft’s data mining tools, without any bulky downloads and with zero configuration effort.

Around May this year I practically entered some sort of sabbatical: 3 months to work on an incubation project of my choice (yes, the Microsoft SQL Server organization does this kind of thing! If it sounds appealing, check out our recruiting site or, even better, contact our SQL Server Data Mining recruiter, Melsa Clarke, directly: melsac AT microsoft DOT com). With some help from Jamie, various management levels and some nice guys in the SQL Server Data Services team, I gathered the infrastructure for a Software as a Service incubation and set up a web incarnation of the Table Analysis Tools add-in for Excel.

Now it is up and running. The entry page is at sqlserverdatamining.com/cloud, so if I have bored you already and you don’t want to read the rest of this stuff, go ahead and browse that page.

What it is

TAT Cloud is a set of canned data mining tasks that you can use without having SQL Server installed on your machine. It consists of encapsulations of some common data mining problems, such as detecting key influencers, forecasting, generating predictive scorecards or doing market basket analysis. The tasks can be executed directly from your browser: just go to the web page, upload your data (in CSV format) and run a tool from the toolbar. Even better, the tasks can be executed directly from Excel. For this, however, you will need to have Excel 2007 and install an add-in which can be downloaded from here.

All tasks work on a table (Excel table or a table in CSV format that you upload to the web interface). All tasks produce reports that can be used to learn more about the analyzed data.

Here is a complete list of features:

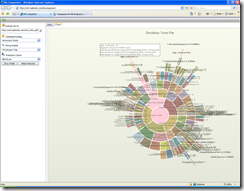

- Analyze Key Influencers: detects the columns that impact your target column. It presents a report of those values in other columns that correlate strongly with values in your target column.

- Detect Categories (clustering, for data miners): identifies groups of table rows that share similar characteristics. A categories report is generated, which details the characteristics of each category.

- Fill From Example: to some extent, similar to Excel’s Autofill feature. It learns from a few examples and extends the learned patterns to the remaining rows in the table.

- Forecasting: analyzes vertical series of numeric data, detects periodicity, trends and correlations between series, and produces a forecast for those series.

- Highlight Exceptions: finds the interesting (or unusual, or out-of-ordinary) rows in your table.

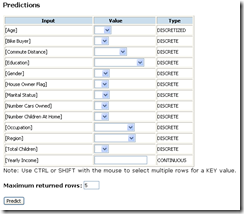

- Scenario Analysis: What-If and Goal-Seek tools based on a probabilistic model built on top of your data.

- Prediction Calculator: a tool for generating prediction scorecards.

- Market Basket Analysis: analyzes transaction tables to identify groups of items that appear together in transactions.

All features work from Excel. However, not all the features are implemented in the web interface. They will show up eventually!
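To give a feel for what "items that appear together in transactions" means in the Market Basket Analysis tool, here is a tiny Python sketch that counts co-occurring pairs in a transaction table. This is only an illustration of the idea, not what the service runs (Analysis Services does the real work there); the `frequent_pairs` function, the `min_support` parameter and the sample rows are all made up for this example.

```python
from collections import Counter, defaultdict
from itertools import combinations


def frequent_pairs(rows, min_support=2):
    """Return item pairs that share at least `min_support` transactions.

    `rows` is a transaction table: an iterable of (transaction_id, item).
    This is an illustrative pair count, not the algorithm used by the service.
    """
    # Group the table rows into one set of items per transaction.
    baskets = defaultdict(set)
    for transaction_id, item in rows:
        baskets[transaction_id].add(item)

    # Count every unordered pair once per transaction; sorting makes
    # (a, b) and (b, a) land on the same key.
    pair_counts = Counter()
    for items in baskets.values():
        for pair in combinations(sorted(items), 2):
            pair_counts[pair] += 1

    return {pair: n for pair, n in pair_counts.items() if n >= min_support}


# Hypothetical sample data: four small transactions.
rows = [
    (1, "bread"), (1, "milk"),
    (2, "bread"), (2, "butter"), (2, "milk"),
    (3, "butter"), (3, "jam"),
    (4, "bread"), (4, "butter"),
]
pairs = frequent_pairs(rows, min_support=2)
```

With this sample, only the bread/milk and bread/butter pairs reach the threshold of two transactions. A real market basket tool goes further (larger item groups, rules with confidence values), but the starting point is the same kind of counting.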

A really nice presentation of how to use the tools was written by Brent Ozar on SQLServerPedia. Thanks, Brent!

How it works

Your data (CSV file or Excel spreadsheet) is uploaded to the web service site. There, Analysis Services crunches it and produces the reports you get either in the browser or in a different spreadsheet. The data and the mining model used for analyzing it are deleted immediately after processing. If something bad happens and your session does not conclude successfully (fancy wording for "if it crashes") then both data and models are removed automatically after 15-20 minutes.
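The two cleanup paths described above (delete right after processing, or sweep up leftovers after a timeout) can be sketched in a few lines of Python. This is a guess at the general pattern, not the actual service code: the `SessionStore` class, its method names and the 15-minute default are assumptions made for illustration.

```python
import time


class SessionStore:
    """Holds uploaded data per session and forgets it as soon as possible.

    Hypothetical sketch: names and the default timeout are assumptions.
    """

    def __init__(self, ttl_seconds=15 * 60, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._sessions = {}  # session_id -> (created_at, payload)

    def put(self, session_id, payload):
        # Remember when the upload arrived so it can be expired later.
        self._sessions[session_id] = (self._clock(), payload)

    def finish(self, session_id):
        # Normal path: processing succeeded, delete data and model right away.
        self._sessions.pop(session_id, None)

    def sweep(self):
        # Fallback path: remove sessions that never finished (e.g. a crash)
        # once they are older than the timeout. Returns the removed ids.
        now = self._clock()
        expired = [
            sid
            for sid, (created_at, _) in self._sessions.items()
            if now - created_at >= self._ttl
        ]
        for sid in expired:
            del self._sessions[sid]
        return expired

    def __contains__(self, session_id):
        return session_id in self._sessions
```

A background job calling `sweep()` every few minutes would give the kind of "15-20 minutes" window mentioned above: the exact delay depends on when the sweep happens to run after the timeout passes.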

You will notice in the Excel add-in (as well as in the web interface) a strange IP address, 131.107.181.99. This is the IP address of the service. It will be changed to something cleaner very soon.

Excel uses HTTPS to connect to the service, in an effort to protect your data. However, you should not use this technology on sensitive data.

What it is not

This is not an official shipping Microsoft technology. It is actually less than even a beta. It may crash, it may produce incorrect results, it may be shut down at any time. BTW, I would appreciate it if you posted a note here or on the Microsoft data mining forums if this happens (particularly about crashes, I should probably know if it gets shut down)!

What next

Well, try the tools: sqlserverdatamining.com/cloud. I recommend downloading the Excel 2007 add-in, rather than using the web application, as it is more functional. Post any questions you might have on the Microsoft data mining forums. And check out this blog periodically for announcements (typically new additions to the web interface) or for more details on how this stuff works.

Tags: Cloud, DMX, Excel Add-ins
